3.4 SIA Multi-Agent Platform

The SIA Multi-Agent Platform is a low-code environment for developing distributed Agents. It integrates blockchain technology deeply with the Agent protocol stack and provides full lifecycle management, from application design to production deployment. Its core goal is to let developers quickly build highly reliable, scalable distributed applications through visual orchestration, smart contract integration, and a decentralized runtime environment.

3.4.1 Visual Development Suite

The SIA Multi-Agent Platform is an Agent development and collaboration platform built on the SIA protocol, giving developers and users an efficient system for building and using Agents. It includes search and dialogue engines and a multi-Agent collaboration module, Swarm, which automatically coordinates work among multiple Agents so that tasks ranging from simple to complex can be processed.

The visual development suite includes the following modules: Visual Orchestrator, Smart Contract Editor, Data Model Designer, Protocol Adapter, Debugging Simulator, Deployment Management Center, Monitoring and Analysis Panel, AI-assisted Development, Permission Control Center, Plugin Extension Market, API Gateway, Blockchain Integration Module, Multi-tenant Support, Automated Testing Framework, Resource Scheduling Engine, etc.

3.4.1.1 Visual Orchestrator

- Flowchart designer: builds Agent interaction logic by drag and drop, including conditional branches and parallel tasks, with built-in preset nodes, real-time syntax validation, and error prompts;

- Multi-view switching: a design view shows the complete topology of the logic flow, a debugging view highlights the current execution path, and a monitoring view overlays real-time performance indicators;
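Real-time validation of a flow can be illustrated with two basic checks: every edge must point to an existing node, and the flow must contain no cycles. The following Python sketch assumes a flow is stored as a mapping from each node to its successor nodes and that flows are required to be acyclic, as in the DAG-based task orchestration described for the A2A engine; `validate_flow` is a hypothetical name, not a documented SIA API. Cycle detection uses the standard three-color depth-first search.

```python
from typing import Dict, List


def validate_flow(flow: Dict[str, List[str]]) -> List[str]:
    """Return human-readable errors for a flow given as node -> successor nodes."""
    errors: List[str] = []
    # Check 1: every edge must point at a node that exists in the flow.
    for node, successors in flow.items():
        for nxt in successors:
            if nxt not in flow:
                errors.append(f"{node} -> {nxt}: unknown node")

    # Check 2: three-color DFS; reaching a GRAY node again means a cycle.
    WHITE, GRAY, BLACK = 0, 1, 2
    color = {node: WHITE for node in flow}

    def visit(node: str) -> None:
        color[node] = GRAY
        for nxt in flow[node]:
            if nxt not in flow:
                continue  # already reported by check 1
            if color[nxt] == GRAY:
                errors.append(f"cycle through {node} -> {nxt}")
            elif color[nxt] == WHITE:
                visit(nxt)
        color[node] = BLACK

    for node in flow:
        if color[node] == WHITE:
            visit(node)
    return errors
```

For example, an approval flow that branches into `approve` and `reject` and rejoins at `end` returns no errors, while `{"a": ["b"], "b": ["a"]}` reports a cycle. An editor could run these checks on every change to drive the error prompts.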

3.4.1.2 Smart Contract Editor

- Multi-language support: Solidity (Ethereum) and Rust (Solana), with automatic code generation from logic-flow descriptions;

- Formal verification: static analysis of contract security and simulated execution to verify the correctness of business logic;

- On-chain debugging: visualization of transaction execution traces and comparative analysis of state snapshots;

3.4.1.3 Data Model Designer

- Visual schema definition: supports Protobuf, JSON Schema, and Avro, with automatic generation of type-validation code;

- Semantic constraints: rules described in natural language, plus configurable dynamic validation policies;

- Version migration tool: smooth transitions when data structures change, with automatically generated data migration scripts;
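One common way to organize generated migration scripts is as a chain of single-step transforms, each upgrading a record by exactly one schema version. The Python sketch below assumes integer schema versions and plain dictionary records; the registry, the `migration` decorator, and the two example migrations (`split_name`, `add_currency`) are hypothetical illustrations, not the tool's actual output.

```python
from typing import Callable, Dict, Tuple

Record = Dict[str, object]

# Hypothetical registry: (from_version, to_version) -> transform function.
MIGRATIONS: Dict[Tuple[int, int], Callable[[Record], Record]] = {}


def migration(src: int, dst: int):
    """Register a function as the migration from version src to version dst."""
    def register(fn: Callable[[Record], Record]) -> Callable[[Record], Record]:
        MIGRATIONS[(src, dst)] = fn
        return fn
    return register


@migration(1, 2)
def split_name(rec: Record) -> Record:
    # v2 replaces the single "name" field with first/last name fields.
    first, _, last = str(rec.pop("name")).partition(" ")
    return {**rec, "first_name": first, "last_name": last}


@migration(2, 3)
def add_currency(rec: Record) -> Record:
    # v3 adds a currency field with a default value.
    return {**rec, "currency": rec.get("currency", "USD")}


def migrate(rec: Record, current: int, target: int) -> Record:
    """Apply registered single-step migrations until the target version."""
    while current < target:
        step = MIGRATIONS.get((current, current + 1))
        if step is None:
            raise LookupError(f"no migration from v{current} to v{current + 1}")
        rec = step(dict(rec))  # copy so the caller's record is not mutated
        current += 1
    return rec
```

Keeping each step small means a record at any older version can be brought up to date by replaying the chain, and a missing step is reported instead of silently skipped.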

3.4.1.4 Protocol Adapter

- Protocol conversion engine: converts HTTP requests into the SIA custom protocol through token injection and route mapping;

- Dynamic routing table: intelligent routing based on service tags, with configurable grayscale (canary) release strategies;
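Tag-based routing with a grayscale release can be sketched as follows: find the first route whose tags match the request, then send a fixed percentage of requests to the canary backend. This Python sketch assumes routes carry simple key/value tags and that canary assignment is made by hashing a request ID, so the same request always lands on the same side; `Route`, `Backend`, `bucket`, and `resolve` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Route:
    service: str
    tags: Dict[str, str]      # e.g. {"region": "eu"}
    canary_percent: int = 0   # share of traffic (0-100) sent to the canary backend


@dataclass
class Backend:
    stable: str
    canary: str


def bucket(request_id: str) -> int:
    """Deterministically map a request ID to a bucket in [0, 100)."""
    digest = hashlib.sha256(request_id.encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def resolve(routes: List[Route], backends: Dict[str, Backend],
            wanted: Dict[str, str], request_id: str) -> str:
    for route in routes:
        # A route matches when every requested tag is present with the same value.
        if all(route.tags.get(k) == v for k, v in wanted.items()):
            backend = backends[route.service]
            if bucket(request_id) < route.canary_percent:
                return backend.canary
            return backend.stable
    raise LookupError(f"no route matches tags {wanted}")
```

Raising `canary_percent` step by step (for example 5, 25, 100) widens the grayscale release without changing the routing table's matching logic.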

3.4.1.5 Debugging Simulator Sandbox

- Multi-node cluster simulation: runs 100+ virtual nodes in parallel, with network partition and latency simulation;

- Traffic injection tools: anomaly injection (timeouts, error codes) and stress-test script recording;

- Distributed tracing: full-link log correlation analysis and display of events aligned by vector clock;
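Aligning logs by vector clock relies on the standard vector-clock rules: a node increments its own counter on each local event, takes the element-wise maximum when it receives a message, and two events are ordered only if one clock is less than or equal to the other in every component. The Python sketch below shows these rules on plain dictionaries; the function names are illustrative and not part of the simulator's interface.

```python
from typing import Dict

VectorClock = Dict[str, int]


def tick(clock: VectorClock, node: str) -> VectorClock:
    """Local event: increment this node's own counter."""
    updated = dict(clock)
    updated[node] = updated.get(node, 0) + 1
    return updated


def receive(local: VectorClock, remote: VectorClock, node: str) -> VectorClock:
    """Message receipt: element-wise max of both clocks, then a local tick."""
    merged = {n: max(local.get(n, 0), remote.get(n, 0))
              for n in local.keys() | remote.keys()}
    return tick(merged, node)


def happened_before(a: VectorClock, b: VectorClock) -> bool:
    """True if a -> b: a <= b in every component and a < b in at least one."""
    nodes = a.keys() | b.keys()
    return (all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
            and any(a.get(n, 0) < b.get(n, 0) for n in nodes))


def concurrent(a: VectorClock, b: VectorClock) -> bool:
    """Distinct events that neither precede nor follow each other."""
    nodes = a.keys() | b.keys()
    same = all(a.get(n, 0) == b.get(n, 0) for n in nodes)
    return not same and not happened_before(a, b) and not happened_before(b, a)
```

If node A logs an event and sends it to B, B's next clock `{"A": 1, "B": 2}` is ordered after A's `{"A": 1}`, whereas an independent event on B with `{"B": 1}` is concurrent with A's event. A trace view can lay out ordered events in sequence and show concurrent ones side by side.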

3.4.1.6 Deployment Management Center

- One-click deployment: generates Docker, Kubernetes (K8s), and Helm deployment configurations and automatically registers blockchain nodes;

- Progressive release: traffic-splitting strategies with an automatic rollback mechanism;

- Cross-chain deployment: synchronizes smart contracts across multiple chains and automatically establishes state channels;
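A progressive release with automatic rollback can be reduced to a loop: move a larger share of traffic to the new version, observe its error rate, and roll back as soon as the rate crosses a threshold. In this Python sketch the traffic steps, the 1% threshold, and the `error_rate` callback (standing in for a monitoring query after a soak period) are all assumptions for illustration.

```python
from typing import Callable, List


def progressive_release(steps: List[int], error_rate: Callable[[int], float],
                        max_error_rate: float = 0.01) -> List[str]:
    """Shift traffic to the new version step by step; roll back on regression.

    steps: traffic percentages for the new version, e.g. [5, 25, 50, 100].
    error_rate: observed error rate of the new version at a given percentage.
    """
    log: List[str] = []
    for percent in steps:
        log.append(f"route {percent}% to new version")
        observed = error_rate(percent)
        if observed > max_error_rate:
            # Automatic rollback: send all traffic back to the stable version.
            log.append(f"error rate {observed:.2%} > {max_error_rate:.2%}: rollback to 0%")
            return log
    log.append("release complete")
    return log
```

With a healthy version the log walks through every step and ends in "release complete"; if errors appear at 50% traffic, the release stops there and never reaches 100%.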

3.4.1.7 Monitoring and Analysis Panel

- Real-time dashboard: a heatmap of Agent health status and trends in protocol interaction throughput;

- Root cause analysis: automatic clustering of anomalous patterns and analysis of temporal dependencies between transactions;

- Cost analysis: resource consumption prediction and smart contract gas fee estimation.
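For Ethereum-compatible chains, gas fee estimation follows the EIP-1559 fee rules: the effective gas price is the smaller of the user's maximum fee and the current base fee plus the priority fee (tip), and the fee charged is gas used times that price. The sketch assumes the gas amount has already been estimated (for example with the standard `eth_estimateGas` JSON-RPC method) and that all amounts are in wei. Solana uses a different fee model and is not covered here.

```python
GWEI = 10**9  # 1 gwei = 10^9 wei


def estimate_fee_wei(gas: int, base_fee: int, max_priority_fee: int, max_fee: int) -> int:
    """Upper-bound fee for an EIP-1559 transaction, in wei.

    The effective gas price is min(max_fee, base_fee + max_priority_fee).
    Passing the estimated gas (or the gas limit) gives the expected (or maximum) fee.
    """
    if max_fee < base_fee:
        raise ValueError("max_fee is below the current base fee; the tx would not be included")
    effective_price = min(max_fee, base_fee + max_priority_fee)
    return gas * effective_price
```

A plain ETH transfer uses 21,000 gas; with a 20 gwei base fee, a 2 gwei tip, and a 50 gwei cap, the fee is 21,000 × 22 gwei = 462,000 gwei (0.000462 ETH). If the base fee rose to 48 gwei, the 50 gwei cap would apply instead.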

3.4.2 AI Development Support

The platform provides a built-in tool library and also lets external users build and publish tools, which are open to all users across the network. Integrating different types of tools with user-defined Agents can greatly expand and strengthen the capabilities of the Agents created.

3.4.2.1 Common Tool Library

The common tool library provided on the platform includes:

- Database: stores and retrieves large amounts of information, such as knowledge graphs and relational databases;

- Natural language processing tools: such as lexical analyzers, syntactic parsers, and named entity recognition tools, for better understanding and processing of natural language;

- Machine learning frameworks: such as TensorFlow and PyTorch, for training and running machine learning models;

- Search engine: helps find relevant information quickly;

- Data analysis tools: process and analyze data, such as Excel or professional data analysis software;

- Knowledge inference engine: supports logical reasoning and knowledge derivation;

- Visualization tools: present data and results in an intuitive form;

- Task-specific tools: depending on the application scenario, such as image recognition or speech recognition tools;

- API interfaces: interact and integrate with other systems or services;

- Rule engine: defines and executes specific rules and strategies.

3.4.2.2 AI-Augmented Development Toolkit

In addition, the platform provides an AI-Agent development toolkit:

- Reinforcement learning frameworks: such as OpenAI Gym and Stable Baselines, for developing Agents based on reinforcement learning;

- Model evaluation tools: evaluate AI model performance, for example by computing accuracy, recall, and other metrics;

- Code generation tools: quickly generate code snippets to improve development efficiency;

- Automated testing tools: run automated tests on the AI Agents under development;

- Data annotation tools: annotate data to provide accurate labels for training;

- Model compression tools: reduce model size for easier deployment and operation;

- Distributed computing frameworks: support large-scale data processing and model training;

- Monitoring tools: monitor the operating status and performance of AI Agents in real time;

- Version control tools: manage different versions of code and models;

- Ethical evaluation tools: help ensure that the development and use of AI Agents comply with ethical norms.

3.4.3 Protocol Compatibility Engine

The protocol compatibility engine of the SIA developer platform integrates deeply with the A2A protocol and adapts intelligently to MCP Servers, enabling seamless collaboration across systems and protocols. This solution has been deployed in industries such as finance and gaming, significantly reducing system integration costs and improving cross-system collaboration efficiency.

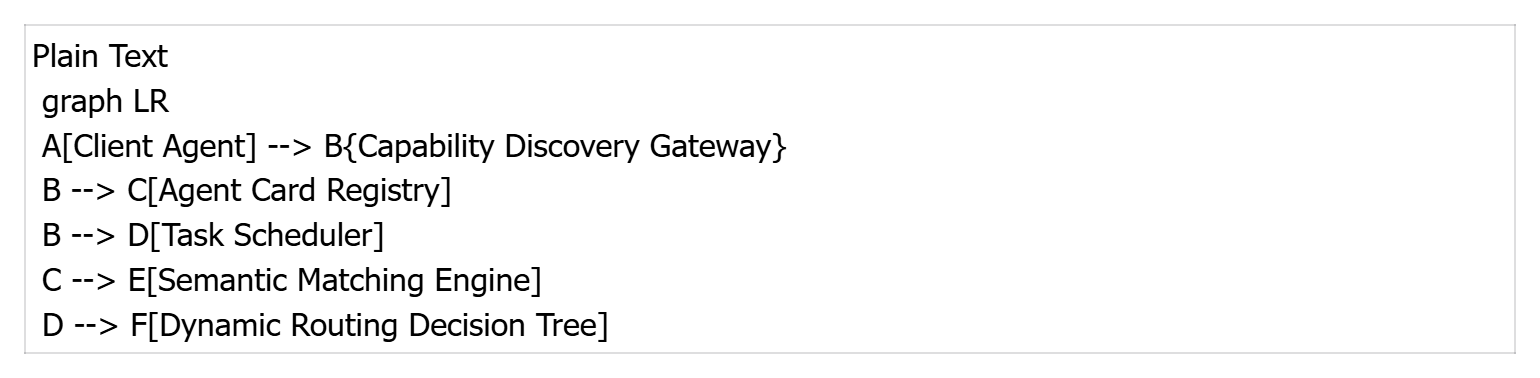

3.4.3.1 A2A Protocol

The Agent collaboration engine supports capability discovery and dynamic routing based on the AgentCard and provides multi-modal communication (text, audio, and video streams), enabling task decomposition and result aggregation across Agents on different platforms. The A2A protocol engine uses a layered, decoupled architecture with three core modules:

- Capability Discovery Layer: declares Agent capabilities dynamically through the AgentCard (metadata in JSON-LD format) and supports semantic tag matching (e.g., "capability": "order_processing");

- Task Orchestration Layer: a task flow engine based on a DAG (Directed Acyclic Graph) that supports parallel task splitting and dependency definitions;

- Collaboration Communication Layer: combines HTTP/2 requests with SSE (Server-Sent Events) pushes to provide bidirectional streaming communication and state synchronization.
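A DAG task flow engine can work out which subtasks may run in parallel by repeatedly taking every task whose dependencies are already satisfied, a level-by-level variant of Kahn's topological sort. The Python sketch assumes each task lists the tasks it depends on; the task names and the `parallel_stages` function are hypothetical.

```python
from typing import Dict, List, Set


def parallel_stages(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into stages; tasks in the same stage can run in parallel.

    deps maps each task to the set of tasks it depends on.
    """
    unknown = set().union(*deps.values()) - set(deps)
    if unknown:
        raise ValueError(f"unknown dependencies: {sorted(unknown)}")
    remaining = {task: set(d) for task, d in deps.items()}  # copy; input untouched
    stages: List[List[str]] = []
    while remaining:
        # Every task with no unfinished dependency is ready now.
        ready = sorted(t for t, d in remaining.items() if not d)
        if not ready:
            raise ValueError("dependency cycle among: " + ", ".join(sorted(remaining)))
        stages.append(ready)
        for t in ready:
            del remaining[t]
        for d in remaining.values():
            d.difference_update(ready)  # mark this stage's tasks as done
    return stages
```

For an order-reconciliation job, `fetch_orders` and `fetch_inventory` land in the first stage and run in parallel, `reconcile` waits for both, and `report` runs last. A dependency cycle is rejected, which is what keeps the task graph a DAG.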

The cross-Agent collaboration process includes:

- Capability discovery: obtain the target Agent's capability set through the GET /capabilities interface;

- Task distribution: generate task shards based on capability tags;

- State synchronization: push progress updates through an SSE event stream (e.g., {"status": "processing", "progress": 65});

- Result aggregation: merge subtask results using the MapReduce model.
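The state-synchronization and aggregation steps can be sketched with two small functions: one serializes a progress update in the Server-Sent Events wire format (`event:` and `data:` fields terminated by a blank line), and one merges subtask results in map and reduce phases. The `progress` event name and the choice of summing per-key counts as the reduce operation are assumptions for illustration.

```python
import json
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def sse_event(payload: Dict[str, object], event: str = "progress") -> str:
    """Serialize one Server-Sent Events message; a blank line ends the message."""
    return f"event: {event}\ndata: {json.dumps(payload)}\n\n"


def map_subtask(result: Dict[str, int]) -> List[Tuple[str, int]]:
    """Map phase: emit (key, value) pairs from one subtask's partial result."""
    return list(result.items())


def reduce_results(partials: Iterable[Dict[str, int]]) -> Dict[str, int]:
    """Reduce phase: sum the values for each key across all subtask results."""
    totals: Counter = Counter()
    for partial in partials:
        for key, value in map_subtask(partial):
            totals[key] += value
    return dict(totals)
```

For example, two subtasks reporting order counts by region, `{"eu": 3, "us": 5}` and `{"us": 2, "apac": 1}`, reduce to `{"eu": 3, "us": 7, "apac": 1}`.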

3.4.3.2 Native MCP Protocol Support

The SIA platform has built-in MCP standard interfaces and uses dual-mode (REST/GraphQL) protocol adaptation to build its tool invocation framework:

- REST mode: standardized interface definitions based on the OpenAPI 3.0 specification, supporting both OAuth 2.0 and JWT authentication;

- GraphQL mode: built-in Schema Definition Language (SDL) support, providing type-safe query and mutation operations.

The platform supports dynamic loading of external tool description files (JSON Schema), including schema hot updates: it monitors the /tools directory for changes, automatically parses updated JSON Schema files, and versions schemas according to the SemVer specification. It also provides context-aware cache optimization through a two-level caching architecture based on Redis, with a dynamic eviction policy that combines LRU and TTL.
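The combined LRU+TTL eviction policy can be illustrated with an in-process cache such as the first level of a two-level design: an entry is dropped either when it has not been used recently and the cache is full, or when its time-to-live has passed. This Python sketch covers only that eviction logic; in Redis itself the equivalent behavior comes from a `maxmemory-policy` such as `allkeys-lru` together with per-key `EXPIRE`. The class name and the injectable clock are assumptions.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable, Optional


class LruTtlCache:
    """Bounded cache: evicts least-recently-used entries and expires stale ones."""

    def __init__(self, capacity: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.ttl = ttl_seconds
        self.clock = clock
        self._data: OrderedDict = OrderedDict()  # key -> (expires_at, value)

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:  # TTL expired: drop it and report a miss
            del self._data[key]
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key: Hashable, value: Any) -> None:
        self._data[key] = (self.clock() + self.ttl, value)
        self._data.move_to_end(key)
        while len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry
```

With a capacity of 2, reading `a` and then inserting `c` evicts `b` (the least recently used entry), and once the TTL elapses `a` is treated as a miss even though it was recently read.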

The MCP Server uses a microservice architecture. Its core components are:

- Tool Service Gateway: dynamically loads external tool description files, parses OpenAPI 3.0 (Swagger) specifications, and automatically extracts parameter validation rules and error message templates;

- Context Manager: maintains the long-term memory of LLM sessions (stored in Redis Streams) and keeps data eventually consistent through cross-node state synchronization over Redis Pub/Sub;

- Resource Scheduler: provides elastic scaling on Kubernetes, automatically scaling out for traffic spikes (e.g., CPU utilization above 70% triggers scaling), and balances load intelligently by combining weighted round-robin with least-connections scheduling.
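Two parts of the Resource Scheduler can be made concrete. For scaling, the Kubernetes Horizontal Pod Autoscaler computes `desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric)` and skips changes within a tolerance (0.1 by default). For load balancing, one common way to combine weights with connection counts is weighted least connections, used here as a stand-in for the text's "weighted round-robin + least connections": pick the backend with the fewest active connections per unit of weight. The 70% target comes from the text; the backend names and weights are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


def desired_replicas(current: int, cpu_utilization: float, target: float = 0.70,
                     tolerance: float = 0.10) -> int:
    """Kubernetes HPA rule: ceil(current * observed / target), skipped within tolerance."""
    ratio = cpu_utilization / target
    if abs(ratio - 1.0) <= tolerance:
        return current
    return math.ceil(current * ratio)


@dataclass
class Backend:
    name: str
    weight: int      # relative capacity
    active: int = 0  # open connections


def pick_backend(backends: List[Backend]) -> Backend:
    """Weighted least connections: lowest active/weight wins; ties keep list order."""
    chosen = min(backends, key=lambda b: b.active / b.weight)
    chosen.active += 1
    return chosen
```

At 91% CPU against the 70% target, 4 replicas scale to ceil(4 × 1.3) = 6, while 72% is within tolerance and leaves the count at 4. With weights 1 and 3, the heavier backend receives three of the first four connections.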

3.4.3.3 A2A and MCP Collaborative Architecture

The collaborative architecture consists of the user, a main scheduling Agent (Orchestrator), subtask Agents (Agent1, Agent2, and so on), the MCP Server, and external systems, which interact through specific protocols to process complex tasks collaboratively. The key technologies are protocol conversion middleware and an intelligent routing engine. The protocol conversion middleware has two parts:

- A2A-MCP converter: automatically extracts task parameters, generates MCP request bodies, and reconciles semantic differences between the protocols, for example by mapping A2A's priority to an MCP timeout, so that conversion is seamless;

- MCP-A2A adapter: converts tool responses into the A2A standard event format and supports sharded streaming transmission, with a configurable SSE block size to meet the data transmission requirements of different scenarios.
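Both halves of the middleware can be sketched briefly. MCP tool invocations are JSON-RPC 2.0 requests using the `tools/call` method, with the tool `name` and its `arguments` in `params`. MCP does not define a timeout field in that request, so this sketch returns the timeout alongside it for the caller to enforce. The A2A-side task shape (`tool`, `params`, `priority`), the priority-to-timeout table, and the chunk event fields are all hypothetical.

```python
import json
from itertools import count
from typing import Any, Dict, Iterator, List, Tuple

# Hypothetical mapping: higher A2A priority -> shorter client-side timeout.
PRIORITY_TIMEOUT_S = {"high": 5.0, "normal": 15.0, "low": 60.0}
_request_ids = count(1)


def a2a_to_mcp(task: Dict[str, Any]) -> Tuple[Dict[str, Any], float]:
    """Build an MCP `tools/call` JSON-RPC request from an A2A-style subtask."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": task["tool"], "arguments": task.get("params", {})},
    }
    timeout = PRIORITY_TIMEOUT_S.get(task.get("priority", "normal"), 15.0)
    return request, timeout


def mcp_to_a2a_chunks(task_id: str, result_text: str, block_size: int = 1024) -> Iterator[str]:
    """Split a tool result into SSE messages carrying at most block_size characters each."""
    pieces: List[str] = [result_text[i:i + block_size]
                         for i in range(0, len(result_text), block_size)] or [""]
    for index, piece in enumerate(pieces):
        event = {"taskId": task_id, "chunk": index,
                 "last": index == len(pieces) - 1, "text": piece}
        yield f"data: {json.dumps(event)}\n\n"
```

A high-priority supplier query thus becomes a `tools/call` request with a 5-second budget, and a 10-character result with `block_size=4` streams back as three SSE chunks, the last one flagged with `"last": true`.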

The user initiates a composite task request. The main scheduling Agent receives it, parses the natural-language requirements into a structured task description in JSON-LD format, and distributes the task to subtask Agent1 via the A2A protocol (over HTTP/SSE). Agent1 receives the task and, following the A2A protocol, calls the MCP Server; the call itself uses the JSON-RPC protocol. The MCP Server then interacts with external systems, obtaining data through database queries or API calls. The external systems return structured data to the MCP Server, which encapsulates it as an MCP response and returns it to Agent1. Agent1 returns the task execution result to the main scheduling Agent in the A2A standard event format.

Based on task progress, the main scheduling Agent triggers the next stage of the task, for example by assigning it to Agent2. Agent2 also interacts with the MCP Server via the A2A protocol. This time the MCP Server can call multiple tools in parallel and run distributed queries against several external systems, aggregating the multi-source data into a combined response for Agent2. Agent2 submits the final result to the main scheduling Agent, which generates a standardized deliverable and returns it to the user.

Task Triggering and Decomposition:

- A2A protocol function: the main scheduling Agent receives user requests through the /tasks/send interface, parses the natural-language requirements, and converts them into a structured task description in JSON-LD format;

- MCP protocol preparation: the tool registration center verifies the OpenAPI specification of supplier_api and dynamically loads its Swagger document to generate parameter validation rules for subsequent tool calls.

MCP Server Execution Process:

- Protocol conversion: converts A2A's tool_call into a standard MCP request;

- Security verification: combines JWT token verification with IP whitelist filtering to ensure that calls are legitimate and secure;

- Parallel execution: tool calls with no mutual dependencies are run on multiple threads to improve execution efficiency.
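The IP whitelist check and the parallel execution of independent tool calls map directly onto Python's standard library: `ipaddress` for network membership tests and `concurrent.futures.ThreadPoolExecutor` for running calls on multiple threads. JWT verification is omitted here; it would normally use a vetted JWT library. The allowed networks and the tool names in the usage note are hypothetical.

```python
import ipaddress
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict

# Hypothetical allow-list; in practice this would come from configuration.
ALLOWED_NETWORKS = [ipaddress.ip_network("10.0.0.0/8"),
                    ipaddress.ip_network("192.168.1.0/24")]


def ip_allowed(client_ip: str) -> bool:
    """True if the caller's address falls inside any allowed network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in ALLOWED_NETWORKS)


def run_independent_calls(calls: Dict[str, Callable[[], Any]],
                          max_workers: int = 8) -> Dict[str, Any]:
    """Run tool calls that have no mutual dependencies on a thread pool."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {name: pool.submit(fn) for name, fn in calls.items()}
        # result() re-raises any exception from the tool call in the caller.
        return {name: fut.result() for name, fut in futures.items()}
```

A request from 10.2.3.4 passes the filter while one from 203.0.113.9 is rejected; stock and price lookups for the same SKU, which do not depend on each other, can then be submitted together and collected by name.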

Data Aggregation and State Synchronization:

- A2A state management: a DAG state machine tracks task progress and pushes intermediate results to collaborating Agents in real time;

- Exception handling mechanism: automatic retries, circuit breaking, and compensating transactions.
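Automatic retry and circuit breaking are usually layered: retries with exponential backoff absorb transient failures, while a circuit breaker stops calling a dependency that keeps failing and lets a single trial call through after a cool-down period. This Python sketch shows both patterns with assumed thresholds; compensating transactions are not covered. The class and function names are hypothetical.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    pass


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; allows a trial call after `reset_after` s."""

    def __init__(self, threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], Any]) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open: failing fast")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # success closes the circuit
        return result


def with_retry(fn: Callable[[], Any], attempts: int = 3, base_delay: float = 0.1,
               sleep: Callable[[float], None] = time.sleep) -> Any:
    """Retry with exponential backoff: waits base_delay, 2*base_delay, ... between tries."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # do not retry while the breaker is open
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

Wrapping a tool call as `with_retry(lambda: breaker.call(tool))` retries transient errors, but once the breaker opens, callers fail fast instead of waiting on a dependency that is down.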

Result Integration and Delivery:

- Cross-Agent data fusion: Schema-on-Read unifies heterogeneous data formats for subsequent processing and analysis;

- Automatic generation of visual reports: reports can be generated automatically in various formats, such as PDF, Markdown, and tables, with content structured according to the deliverable specifications.

3.4.4 Extension Modules

3.4.4.1 Blockchain Distributed Database: Infinitely Scalable Data Service Capability

SIA provides users with easy-to-use data storage and data access services based on smart contracts and encryption technology. The underlying layer is a blockchain distributed database organized as Merkle trees. To verify the integrity of any single data item, a node needs only the root hash plus the sibling hashes along the path from that item to the root, a number that grows only logarithmically with the amount of data. This makes integrity checks over large amounts of data efficient and, compared with storing the hash of every data item, greatly saves storage space.
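A Merkle tree hashes each data item, then repeatedly hashes adjacent pairs until one root hash remains; a proof for one item is the list of sibling hashes on its path, about log2(n) of them. This Python sketch uses SHA-256 and duplicates the last node on odd-sized levels (the Bitcoin-style convention). It is illustrative only: production trees typically also separate leaf and inner-node hashing to prevent second-preimage tricks, and SIA's actual tree layout is not specified in the text.

```python
import hashlib
from typing import List, Tuple


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _next_level(level: List[bytes]) -> List[bytes]:
    if len(level) % 2:
        level = level + [level[-1]]  # odd count: pair the last node with itself
    return [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: List[bytes]) -> bytes:
    if not leaves:
        raise ValueError("no leaves")
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool says the sibling is on the right."""
    level = [h(leaf) for leaf in leaves]
    proof: List[Tuple[bytes, bool]] = []
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        sibling = index ^ 1  # the other member of this node's pair
        proof.append((padded[sibling], sibling > index))
        level = _next_level(level)
        index //= 2
    return proof


def verify(leaf: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    node = h(leaf)
    for sibling, sibling_on_right in proof:
        node = h(node + sibling) if sibling_on_right else h(sibling + node)
    return node == root
```

For five transactions the proof for any one of them holds three hashes, and changing even one byte of the transaction makes verification against the stored root fail.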

Each node stores all or part of the blockchain data locally, such as blocks and transaction records, and classifies it by data type and function, for example block data, account information, and smart contract code.

The platform provides a complete data synchronization mechanism to ensure data consistency and integrity:

- New block broadcast: when a node generates a new block, it broadcasts the block to the other nodes in the network;

- Verification and reception: the other nodes receive the new block and validate it, checking transaction legality, hash values, and so on; if verification passes, the block is accepted and stored;

- Request missing data: if a node finds that it is missing certain historical blocks, it can request the missing data from other nodes;

- Synchronization frequency adjustment: the frequency and priority of data synchronization are adjusted dynamically based on network conditions and node performance;

- Data repair: if inconsistent or corrupted data is found during synchronization, it is repaired through interaction with other nodes;

- Consensus-driven: synchronization is carried out on the basis of consensus, ensuring that the final data state of all nodes is consistent.
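Two of these steps, hash-link validation of a received block and detection of missing historical blocks, can be sketched with a minimal block structure. The `Block` fields, the all-zero genesis parent hash, and the string-based hashing are simplifying assumptions; real validation would also check transaction legality and consensus rules, which this sketch does not attempt.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Block:
    height: int
    prev_hash: str
    payload: str

    def digest(self) -> str:
        data = f"{self.height}|{self.prev_hash}|{self.payload}".encode()
        return hashlib.sha256(data).hexdigest()


GENESIS_PARENT = "0" * 64  # assumed parent hash for the first block


def accept(local: Dict[int, Block], block: Block) -> bool:
    """Store a received block only if it links to the stored parent block."""
    if block.height == 0:
        ok = block.prev_hash == GENESIS_PARENT
    else:
        parent = local.get(block.height - 1)
        ok = parent is not None and block.prev_hash == parent.digest()
    if ok:
        local[block.height] = block
    return ok


def missing_heights(local: Dict[int, Block], peer_tip: int) -> List[int]:
    """Heights this node must request from peers to catch up to peer_tip."""
    return [height for height in range(peer_tip + 1) if height not in local]
```

A node holding only the genesis block rejects block 2 (its parent is unknown), computes that heights 1 and 2 are missing, requests them, and then accepts both in order; a block whose `prev_hash` does not match its parent is refused.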

3.4.4.2 Distributed Consumer-Grade Computing Power Network

SIA provides upper-layer applications with an elastic, flexible, and efficient distributed network of consumer-grade computing power. By combining task segmentation and optimization, distributed collaboration, dynamic resource allocation, scheduling algorithms iteratively optimized on SIA, adaptation to different types of computing hardware, and effective data caching and pre-processing, SIA Blockchain can address computing performance bottlenecks while providing distributed computing power.

Potential computing nodes join the computing power network by installing the SIA Protocol program, which is responsible for managing and operating the entire network:

- Task management: receives and manages computing tasks assigned by SIA Agents, including task download, start, pause, resume, and submission;

- System monitoring: monitors hardware status in real time, such as CPU/GPU usage, memory occupancy, and temperature, and adjusts the execution of computing tasks accordingly;

- Resource allocation: adjusts the computing resources allocated to Agents, either according to user settings or automatically, for example by limiting the CPU/GPU usage ratio;

- Data transmission: handles data exchange with the server, including uploading and downloading task data;

- Statistical information display: shows statistics on the node's participation, such as the number of completed tasks, contributed computing power, and earned points, so that nodes can see their contributions;

- Project selection and joining: lets nodes browse available Agents and join the Agent tasks they are interested in;

- Notifications and reminders: provides notifications about task status, Agent updates, and similar events;

- Settings adjustment: lets nodes customize parameters of the SIA Protocol, such as network connection and resource allocation strategy;

- Fees and billing: displays dynamic prices for different resources to users and nodes, uses rule-based “bidding matching” to find the optimal match, provides real-time billing, and automatically settles fees involving multiple parties.
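The matching rules behind “bidding matching” are not specified, so the following Python sketch illustrates the idea with one simple, hypothetical rule set: tasks bid a maximum hourly price and a GPU-memory requirement, nodes ask a price, higher bids are served first, and each goes to the cheapest idle node that satisfies it. This greedy heuristic is easy to follow but does not guarantee a globally optimal matching; an optimal matcher would need an assignment algorithm such as min-cost bipartite matching. Per-second billing at the matched price is likewise an assumption.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Offer:  # a computing node's ask
    node: str
    gpu_mem_gb: int
    price_per_hour: float
    busy: bool = False


@dataclass
class Bid:  # an Agent task's request
    task: str
    gpu_mem_gb: int
    max_price_per_hour: float


def match(bids: List[Bid], offers: List[Offer]) -> Dict[str, Optional[str]]:
    """Greedy matching: highest bids first, each to the cheapest eligible idle node."""
    assignment: Dict[str, Optional[str]] = {}
    for bid in sorted(bids, key=lambda b: b.max_price_per_hour, reverse=True):
        eligible = [o for o in offers
                    if not o.busy and o.gpu_mem_gb >= bid.gpu_mem_gb
                    and o.price_per_hour <= bid.max_price_per_hour]
        best = min(eligible, key=lambda o: o.price_per_hour, default=None)
        if best is not None:
            best.busy = True
        assignment[bid.task] = best.node if best is not None else None
    return assignment


def bill(price_per_hour: float, seconds: int) -> float:
    """Per-second billing at the matched hourly price, rounded to cents."""
    return round(price_per_hour * seconds / 3600, 2)
```

With a 24 GB node asking 0.40 per hour and an 8 GB node asking 0.10, a rendering task needing 16 GB gets the large node, a small embedding job gets the cheap one, and a third task needing 24 GB stays unmatched until capacity frees up. Ninety minutes on the large node bills as 0.60.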